What’s so special?

Ever forgotten to breathe? When was the last time you told your heart to beat? Remember the sinew you stretched the last time you picked up your glass of water? Did you feel it?

I don’t know about you, but when I go to a sauna, I always tell each of my individual sweat pores to “go on, let it ooouuut, you are freee”! When I run, I decide to breathe faster and manually increase my blood oxygen level. Oh, and of course I get a crush on someone only when I consciously decide to do so!

OK, let’s be honest now 😉 We were all born with a personal assistant: our subconscious mind!

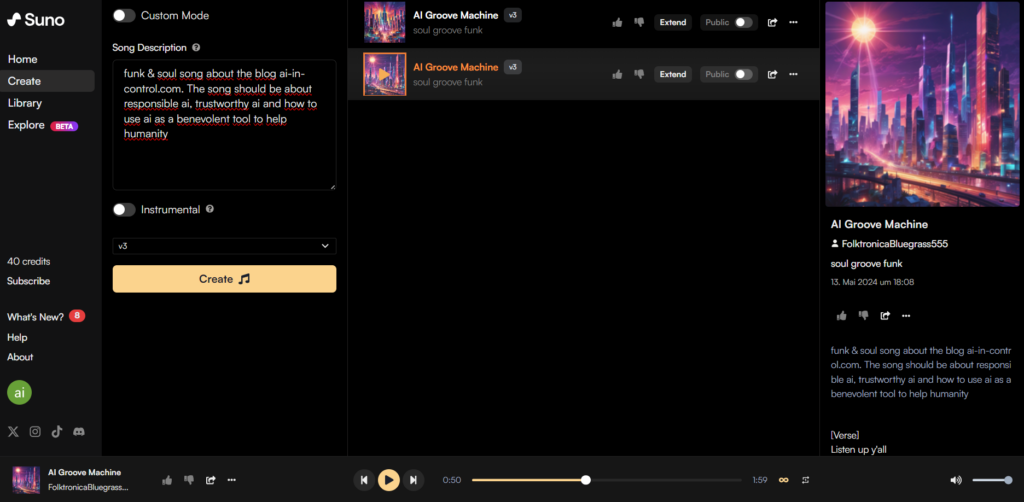

GoGo Gadget: ChatGPT

Q: How much faster is the subconscious compared to the conscious mind? How much more information can it process in a second?

A: The subconscious mind processes information significantly faster than the conscious mind. While the conscious mind can handle around 400 bits per second (bps), the subconscious mind likely processes a whopping 27,500 times more data. To put this in perspective, the brain takes in approximately 11 million bits per second through the senses, but conscious activities like reading or playing the piano max out at fewer than 50 bps. So, most of the 11 Mbps is processed subconsciously, making the subconscious a data-processing powerhouse! [1]

[1] https://thinkbynumbers.org/psychology/subconscious-processes-27500-times-more-data-than-the-conscious-mind/

Q: Are you sure? Please, be a bit more critical!

A: The claim that the subconscious mind can process up to 40 million bits of information per second is a common one in popular psychology and self-help literature. However, it's important to note that the exact capacity of the subconscious mind is not well-defined and can vary depending on how information processing is measured.

The human brain is incredibly complex, and while the subconscious mind plays a significant role in processing information and influencing our thoughts, feelings, and behaviors, it is difficult to quantify its processing capacity in terms of bits per second.

The notion of the subconscious mind processing massive amounts of information in parallel at such high speeds is often based on theories and interpretations rather than concrete scientific evidence. While the subconscious mind is undoubtedly powerful and capable of processing information outside of our conscious awareness, claims about specific processing capacities should be approached with caution.

Research in cognitive psychology and neuroscience continues to shed light on the workings of the subconscious mind, but as of my last knowledge, there isn't a definitive answer regarding the exact processing capacity of the subconscious mind in terms of bits per second.

Whether our subconscious mind is 27,500 times faster or just a few hundred times faster than our conscious mind is not important here. However, I think we can conclude that we were all born with a very powerful personal assistant.

The main goal of this personal assistant is to keep us alive and to do so with minimal energy investment.
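As a quick sanity check on the figures quoted in the first ChatGPT answer above: the 27,500 ratio is simply the quoted sensory intake divided by the quoted conscious rate. The small Python sketch below just redoes that division. The names are my own, and the input numbers are the ones from the quoted answer, not independently verified.

```python
# Figures as quoted in the ChatGPT answer (not independently verified).
SENSORY_INTAKE_BPS = 11_000_000  # bits per second taken in through the senses
CONSCIOUS_BPS = 400              # quoted capacity of the conscious mind
CONSCIOUS_TASK_BPS = 50          # quoted upper bound for reading or piano playing


def ratio(total_bps: int, conscious_bps: int) -> float:
    """Return how many times larger the total intake is than the conscious rate."""
    return total_bps / conscious_bps


if __name__ == "__main__":
    print(ratio(SENSORY_INTAKE_BPS, CONSCIOUS_BPS))       # 27500.0
    print(ratio(SENSORY_INTAKE_BPS, CONSCIOUS_TASK_BPS))  # 220000.0
```

Depending on which of the two quoted conscious rates you pick, the ratio changes by a factor of eight, which is one more reason to treat the headline number with caution.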

Stimulus-Organism-Response (S-O-R) model

The Stimulus-Organism-Response (S-O-R) model was proposed by Robert S. Woodworth in 1929 as an expansion of Ivan Pavlov’s classic stimulus-response model.

GoGo Gadget: ChatGPT

Q: Please explain the S-O-R model.

A: Certainly! The S-O-R model stands for Stimulus-Organism-Response. It’s a conceptual framework in psychology that describes how individuals respond to external stimuli. According to this model, an external stimulus triggers a reaction in an organism, often without the need for conscious thought. In other words:

Stimulus: The external event or input.

Organism: The individual’s internal state, including emotions, thoughts, and physiological processes.

Response: The observable behavior or reaction.

The S-O-R model helps us understand the what, why, and how of our behavior. [1]

[1] https://researchleap.com/the-stimulus-organism-response-s-o-r-paradigm-as-a-guiding-principle-in-environmental-psychology/

Please imagine the following scenario: You are driving at around 180 km/h in the left (fast) lane of the highway. You are relaxed, and your car handles the speed quite gracefully. All of a sudden, a much slower car in the middle lane cuts you off by switching to the left lane right in front of you.

You slam your foot on the brake pedal with all the power you have.

You get enraged. You raise one hand and push the horn on your steering wheel with the other. Your heart is racing.

The Stimulus was the other car cutting you off.

Your Response, in this purely imaginary situation, was braking hard, screaming loudly and letting the other driver know that they are in the wrong!

But what happened in between? What was your “Organism” doing? Since this question is much harder to answer, the Organism is also called the black box.
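To make the three parts a bit more concrete, here is a toy sketch of the highway scenario in Python. Everything in it (the class names, the `threat` and `stress` scores, the threshold rules, the response strings) is invented for illustration and is not part of the S-O-R model itself. The only point is that the same stimulus can lead to different responses depending on what is going on inside the black box.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Stimulus:
    """The external event, e.g. another car cutting you off."""
    description: str
    threat: float  # hypothetical 0..1 score of how dangerous the event is


@dataclass
class Organism:
    """The 'black box': internal state that shapes the reaction."""
    stress: float  # hypothetical 0..1 baseline stress level

    def respond(self, stimulus: Stimulus) -> list[str]:
        """Map a stimulus to observable behavior, filtered by internal state."""
        response = []
        if stimulus.threat > 0.5:
            response.append("brake hard")  # happens regardless of mood
        # Hypothetical rule: anger shows once threat plus stress passes 1.0.
        if stimulus.threat + self.stress > 1.0:
            response.append("honk and shout")
        return response


cut_off = Stimulus("slower car switches into your lane", threat=0.9)
print(Organism(stress=0.0).respond(cut_off))  # ['brake hard']
print(Organism(stress=0.6).respond(cut_off))  # ['brake hard', 'honk and shout']
```

In this toy version the black box is a single number. In a real person it is emotions, thoughts and physiology all at once, which is exactly why it is so hard to look inside.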

Paradigm Shift – GPT-4o – “omni”

With the newly announced GPT-4o model, OpenAI paved the way for a paradigm shift from passive AI assistants, which help you when you ask them, to proactive AI assistants. The latter will give us advice without being asked. They will observe what we observe, hear what we hear and see how we interact. Based on these observations, they can give us guidance and tips that may or may not help us.

Iron Man – J.A.R.V.I.S

Ray-Ban Meta smart glasses

Back to the now. Stylish Ray-Ban Meta smart glasses with the following features are currently available to buy:

- Capture

- Voice Commands

- Call

- Listen

- Live-Stream

It’s not hard to see that a personal assistant like J.A.R.V.I.S will very soon be available to many people. The question is: are we ready?

What will you do?

Considering all of this, how will you use your upcoming proactive personal AI assistant? Let me know in the comments of this article!